Enterprise resource planning (ERP) projects haven’t historically lent themselves to agile philosophies and techniques. The concepts of variable scope, experimentation, a “minimum viable product” (MVP), and frequent small releases align more readily with consumer-facing apps and digital offerings. But evolving requirements and the need for more frequent change are increasingly seen as the norm in ERP projects. Organisations are having to adapt to increased regulatory demands, security challenges and the need to integrate their digital and back-office estates, as well as cope with organic growth and the pressure to replace legacy systems.

Perhaps you’ve read how ‘digital native’ companies like Spotify and Uber deliver their technology-enabled change with agile? Maybe you’ve tried it for yourself in a few bespoke builds around your organisation, and you’ve got a feel for it and the benefits it brings.

But which parts of agile – tools, methods, techniques, organisational constructs – work best in an ERP project?

The ERP conundrum

One big problem with ERP projects is the business not agreeing upfront on what good looks like.

Getting total clarity upfront about what’s needed can be incredibly difficult. A waterfall approach to ERP forces you to commit to something at the start, without knowing how, or whether, it will work at the end.

It leaves you with no scope to deviate, even when it becomes clear that things aren’t right. Meanwhile, months down the line, the business strategy will inevitably have changed, shifting your business requirements with it. Because waterfall relies on a sequence of steps (requirements, design, development, acceptance, deployment, maintenance) and doesn’t move on until each is complete, no working software is produced until late in the process. It lets you fix defects discovered during testing, but it’s nearly impossible to add anything that wasn’t a requirement in the first place.

Here are some things that can be done differently…

Narrow the scope

Challenge the business to think hard right from the start, when you’re defining requirements, about what constitutes an acceptable MVP. What is the subset of requirements, and benefits, that are absolutely vital for go-live? Get the business to commit to this and you can create a delivery plan around it. Then add a wish list of nice-to-haves: for example, a user experience on a par with the best consumer apps. After the MVP goes live, you can deliver these extras in releases or through continuous improvement.

Keep in mind that when users see their wants captured in the requirements and on the wish list, it shows you’ve listened to them and helps build buy-in. It’s far easier to start the design-build-test phases focused on an MVP than to try to cut back to one when delivery is under pressure and you still need to complete build and test.

Adopt, not adapt

Start with a commercial off-the-shelf ERP system that’s pre-configured for your industry. This will allow you to quickly build a model system. You can use this to show how it would satisfy your business’s needs, assess the business change impact and agree where small customisations might be needed.

The temptation is to adapt and customise a system to fit the way your business works. After all, change is unsettling, so the option of avoiding it often looks more favourable. But large-scale customisation adds complexity and increases ownership costs over the long term. As the business grows, you’ll need the ERP system to evolve with it, and once a system has been customised, it becomes harder to keep up to date.

Iterative and incremental

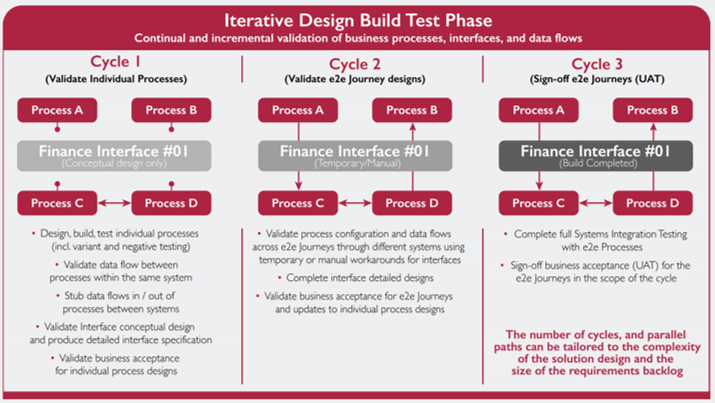

Use a sequence of iterative cycles (design, build, demo, test, repeat) to validate how well the system does what you need it to do.

Taking an iterative delivery approach (incremental build, though without necessarily releasing the results into production) lets you front-load the management of change risk. Frequently showing the results to real users and getting their feedback helps develop confidence in the design before you get too far into the large-scale build and test phases. You also get to validate parts of the system as you move forward, and the early, continuous gains in acceptance reduce the chances that you’ll need expensive functional or technical changes later on.

When delivering end-to-end business processes across functions, expect the build and test phases to identify defects in the design and gaps in the requirements. With iterative design-build-test cycles, you can validate the processes in layers of increasing complexity with each iteration.

Design-build-test cycles

Take, for example, an interface for Finance. In the first cycle, you’d look at the conceptual design and produce a detailed specification. In the second, using a temporary or manual version of the interface, you’d use simulated data flows to verify the specification, then fine-tune the detailed design. And in, say, a third, you’d carry out system integration testing and sign off the built interface. This allows you to test data flows across the system sooner and, if needed, correct them at a lower cost of change. Follow-on design-build-test cycles then validate the finished build.

Once you’ve iteratively proved the processes and know what data will be there, the overall system build and management reporting can follow a waterfall approach. In effect, you’ll have wrapped a waterfall delivery of the interface around an iterative design-build-test phase for the processes.

The iterative cycles are used to confirm the design before the waterfall stages of development begin. Having tested and proved the processes and data flows using simulated interfaces, you may need to change the interface design. Simply specify any change before you develop it. This is cheaper because, at this stage, you’ve only used simulated interfaces and nothing has yet been built. In contrast, with waterfall alone, the need for changes to the interface (for example, to accommodate different data flows or processes) wouldn’t become known until system integration testing, after the interface has been built.

Do the heavy lifting upfront

Don’t jump straight into iterative cycles. Some high-level design needs to be done upfront in order to establish an architecture for the whole system, and to understand how the iterative cycles will come together. It’s important to know what data the business has and where it will be used across the system. Then, when the iterative cycles begin, there’s a single model that can reference the various strands of work.

Do this, and then categorise the requirements into an MVP and a wish list, before starting the first design-build-test cycle. It’s the only way to know which cycles will be needed and to establish an overall plan.

Implications for test phases

These iterative cycles also mean that some activities traditionally left until the system, integration or acceptance test phases can happen much earlier in the lifecycle. In fact, you’ll effectively be doing user acceptance testing each time the business verifies a part of the system and it gets built.

You could also performance-test isolated parts of the system sooner, once the design and build of those sections have been signed off. This presents an opportunity to de-risk other test phases, identify technical issues and verify roles earlier, minimising the chances of finding big technical problems late in the day.

Clear contracting

Make sure your contracting holds your suppliers accountable for timely delivery. Contract around the delivery of an MVP with, for example, options for add-on functionality. This will help keep the project moving forward. Ideally, aim for an output-based measurement and price for each cycle. A time-and-materials contract, by contrast, makes it harder to hold suppliers to delivering defined pieces of work within specific time frames.

The earlier you model and start to build the system in stages, the earlier technical and functional confidence can be built. Having seen and trialled the system, the business accepts it earlier, too. In contrast, with waterfall you get paper-based acceptance at first, and confidence in the design only grows as the build comes together.

But it’s not until user acceptance testing that the business is asked the critical question: can you run your business with this system? If the answer is ‘no’, that means a lot of expensive rework. Finding that out early in the process can make the difference between the success and failure of the entire project.